BirdsEye

https://vimeo.com/631145915

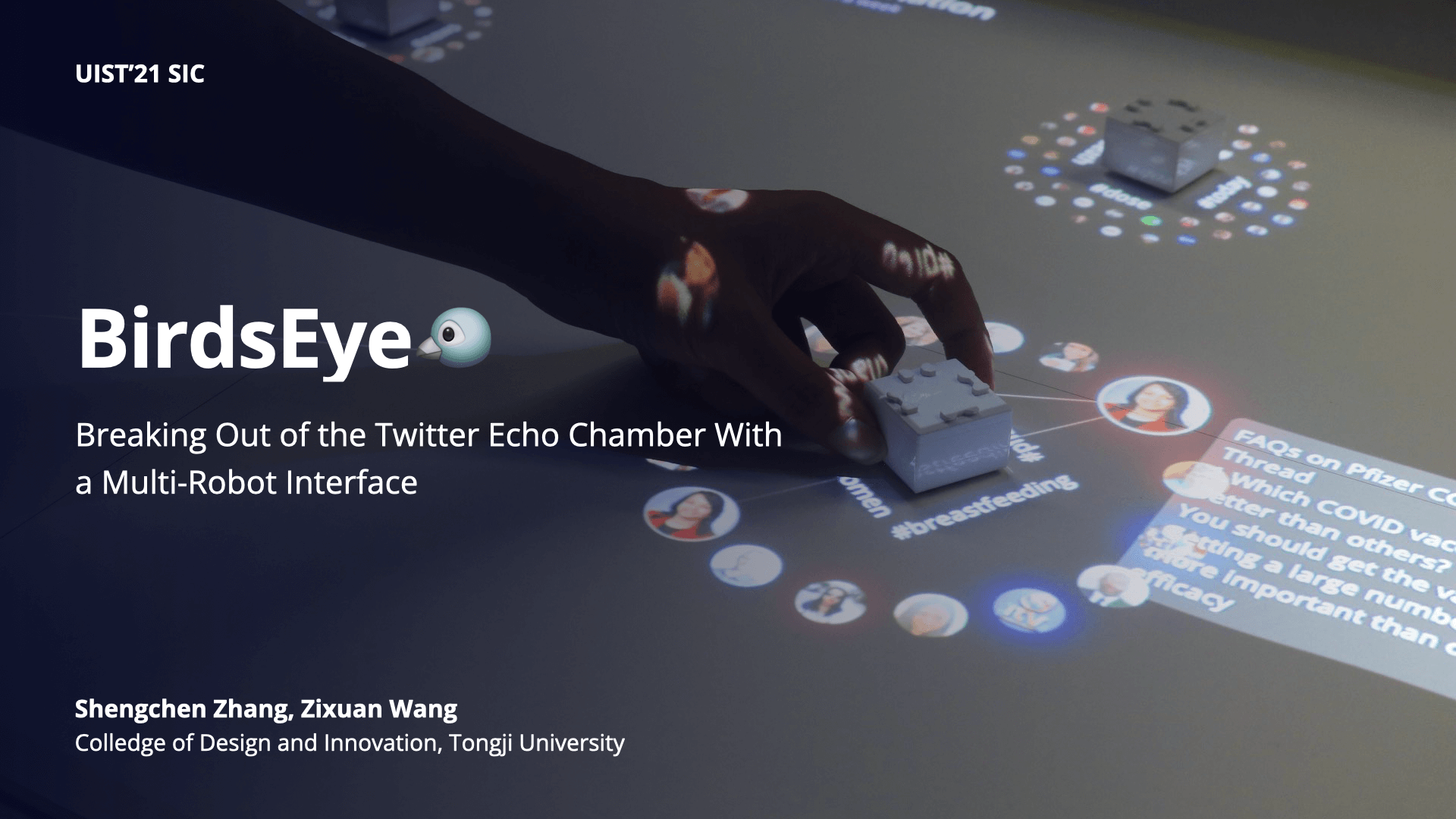

BirdsEye helps you break out of the Twitter echo chamber with a multi-robot interface.

Social media sites are an increasingly popular place for news and public discourse. However, current recommendation algorithms create echo chambers that limit users to posts similar to the ones they already like, which may result in social polarization and extremism[1] [2].

By utilizing a projector and toio robots, we present a new way of browsing social media content that gives the user a bird's eye view of all the different viewpoints regarding a topic and enables the user to directly interact with the algorithm.

The user can cluster, browse, link, and regroup social media posts through intuitive interactions with the robots: moving, flipping, joining, and tapping them. By letting the user interact directly with different viewpoints and with the clustering algorithm, our application can help them break out of the echo chamber and gain a comprehensive view of a topic.

To learn more, read our contest submission!

We just finished our demo session at UIST'21. Check out our code and video (or click here if you prefer YouTube)!

Behind the scenes

BirdsEye is a collaborative project with Zixuan Wang (@melody_orz) for the UIST 2021 Student Innovation Contest. This year, the hardware provided by the contest is the toio robot, a set of small desktop robots that can move, rotate, play sound, and show colors.

We explored many interesting possibilities for these little robots, such as a communication tool for long-distance relationships, an embodiment of a beloved person, a research/office assistant that passes files and information around (which is pretty close to our final idea!), and a sandbox for people to communicate feelings and heal together.

In the end, we wanted our solution to tackle issues that are most relevant to our current state of life. Thus came BirdsEye, a new way to browse the site of the blue bird[3].

We had fun designing various interactions with the toio robots. You can flip a robot as if reading the back of a card (to see the details of a tweet), you can pour stuff out of one robot into another… and many more.
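To make these gestures a bit more concrete, here is a minimal sketch of how flipping, pouring, and regrouping could be modeled in software. It is not our actual implementation: the `Robot` class, its fields, and the `pour` and `regroup` helpers are hypothetical names made up for illustration, and the sketch assumes each robot simply stands in for a list of posts.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Robot:
    """Hypothetical model: one robot stands in for a group of posts."""
    label: str
    posts: List[str] = field(default_factory=list)
    show_details: bool = False

    def flip(self) -> None:
        # Flipping toggles between the summary view and the detail view,
        # like turning a card over to read its back.
        self.show_details = not self.show_details


def pour(source: Robot, target: Robot) -> None:
    """Move every post from source into target (the 'pouring' gesture)."""
    target.posts.extend(source.posts)
    source.posts.clear()


def regroup(robots: List[Robot], post: str, target: Robot) -> None:
    """Reassign a single post to target, removing it from any other robot."""
    for robot in robots:
        if post in robot.posts:
            robot.posts.remove(post)
    target.posts.append(post)


# Example usage with made-up post IDs.
left = Robot("viewpoint A", ["post-1", "post-2"])
right = Robot("viewpoint B", ["post-3"])
left.flip()                                # show details for this robot
regroup([left, right], "post-1", right)    # user moves one post across
pour(left, right)                          # merge what is left into the other robot
```

Keeping the state in plain lists makes every user action easy to inspect, which fits the goal of making the algorithm and its data tangible.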

In all, we hope our work can reduce the echo chamber effect through an engaging alternative to filtered feeds. We also see this as a step towards making machine learning algorithms more transparent and democratized, by making the algorithm and data tangible.

Next steps


(Update: We're selected as one of the twelve teams going to UIST'21!)

(Original text: We're waiting for the selection results right now, which come out on August 9th, only a few days away! If selected, we'll have until October to build a real-life demo of our system and showcase it to everyone at UIST 2021.)